Why did you guys choose to use Fast Sigmoid function the way it was? #107

Replies: 1 comment


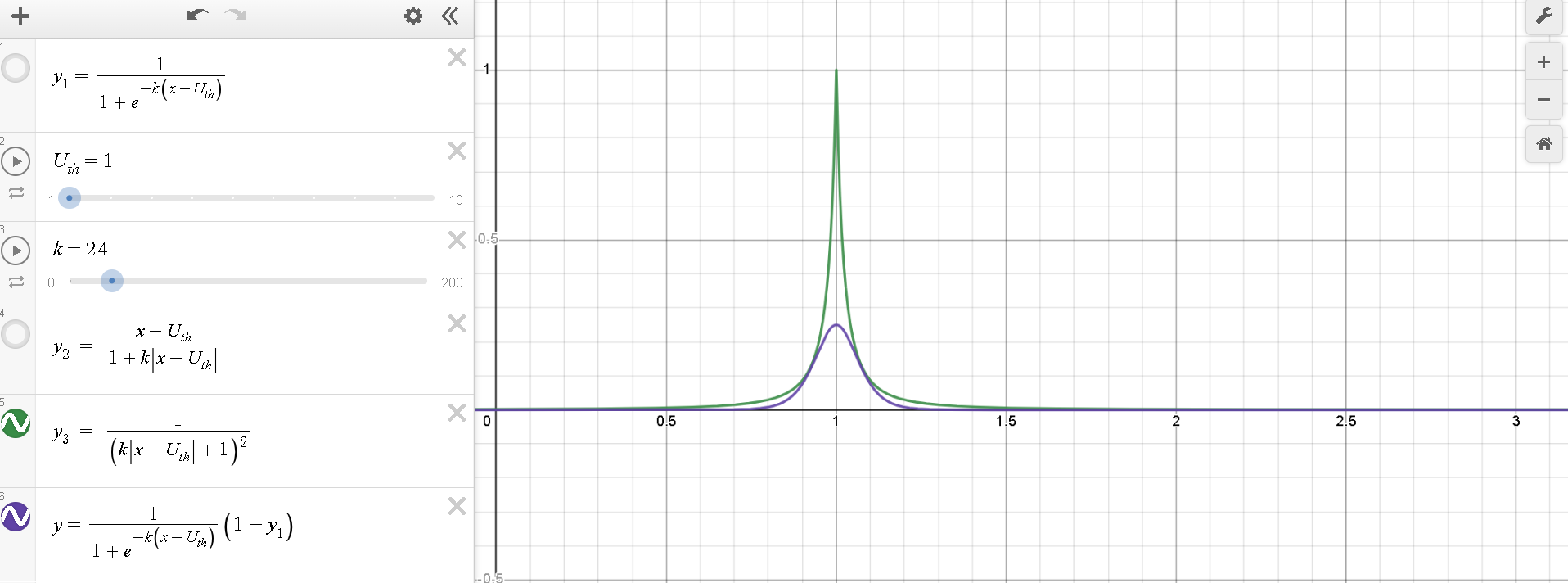

Thank you, this is a good question. I think the fast sigmoid is used to avoid vanishing gradients: as the plot shows, the amplitudes of the two derivatives differ significantly. In backpropagation, the weight update ΔW_ij is a product of the activation function's derivative and other terms, so if that derivative is small, the gradient can vanish (https://arxiv.org/pdf/1901.09948). Additionally, the source code refers to "SuperSpike: Supervised Learning in Multilayer Spiking Neural Networks." Zenke notes that any function that peaks at the spiking threshold should work; the purpose is to mimic a spike. Lastly, the fast sigmoid is faster to compute because it does not require evaluating exp (https://pmc.ncbi.nlm.nih.gov/articles/PMC6118408/pdf/neco-30-1514.pdf).
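To make the comparison concrete, here is a minimal sketch of the two surrogate derivatives. It assumes the SuperSpike-style fast sigmoid `u / (1 + slope*|u|)` and a logistic sigmoid with the same `slope`, where `u` is the membrane potential minus the threshold. The function names and the value `slope = 25.0` are illustrative assumptions, not taken from the library source.

```python
import math


def fast_sigmoid_grad(u, slope=25.0):
    """Derivative of u / (1 + slope*|u|); needs no exp. slope is an assumed value."""
    return 1.0 / (1.0 + slope * abs(u)) ** 2


def sigmoid_grad(u, slope=25.0):
    """Derivative of 1 / (1 + exp(-slope*u)); slope is an assumed value."""
    s = 1.0 / (1.0 + math.exp(-slope * u))
    return slope * s * (1.0 - s)


if __name__ == "__main__":
    # u = 0 is the spiking threshold; larger u means further from it.
    for u in (0.0, 0.1, 0.5, 1.0):
        print(
            f"u={u:4.1f}  "
            f"fast_sigmoid'={fast_sigmoid_grad(u):.3e}  "
            f"sigmoid'={sigmoid_grad(u):.3e}"
        )
```

Under these assumptions both derivatives peak at the threshold (1 for the fast sigmoid, `slope / 4` for the sigmoid), but away from it the fast sigmoid decays only polynomially while the sigmoid derivative decays exponentially, so the fast sigmoid still passes a usable gradient to neurons far from threshold.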


Out of curiosity, I compared the derivative of the fast sigmoid with the derivative of the sigmoid, since both are meant to approximate the Heaviside step function. The derivative of the fast sigmoid looks much more like a Dirac delta impulse than the derivative of the sigmoid does. How does this affect learning in the SNN?
